Comment Consistency Detection Algorithm Based on Code Change History

DOI: https://doi.org/10.62677/IJETAA.2506137

Keywords: Code comment consistency, software evolution, version control analysis, natural language processing, technical debt management

Abstract

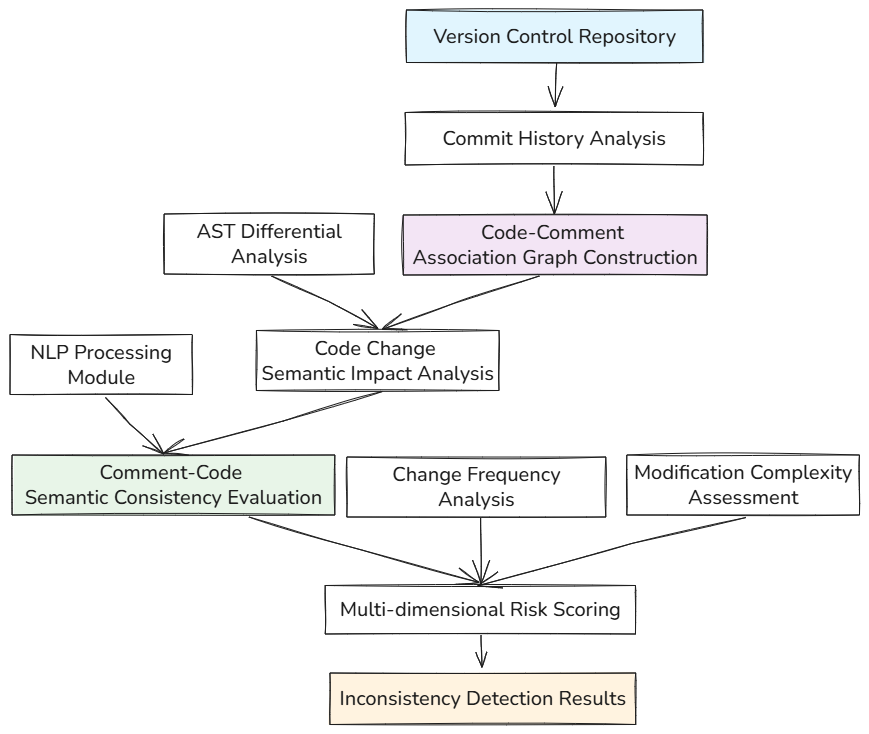

During software evolution, frequent code modifications often leave comments inconsistent with the actual code logic, creating technical debt and increasing maintenance costs. Existing comment consistency detection methods rely primarily on static analysis and do not systematically analyze a project's change history, which makes it difficult to identify comments that became outdated because of code changes. This paper proposes a comment consistency detection algorithm that analyzes commit records in a version control system to find potential inconsistencies where code has been modified but the associated comments have not. The algorithm first constructs a code-comment association graph that maps functions, classes, and variables to their corresponding comments. Next, it uses diff algorithms to determine the semantic impact scope of each code change and decide whether the related comments remain valid. It then applies natural language processing techniques to compute the semantic similarity between each comment and the modified code. Finally, it combines factors such as change frequency and modification complexity to compute a consistency risk score. Evaluation on five large-scale open-source projects shows that the algorithm detects comment inconsistencies with an accuracy of 89.2%, a false positive rate of only 5.9%, and a recall of 91.9%, significantly outperforming existing baseline methods and providing effective technical support for automated code quality management.
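The four stages summarized above can be illustrated with a small, self-contained Python sketch. It is not the author's implementation and makes several assumptions: the code under analysis is Python, docstrings stand in for the associated comments, `difflib` serves as the diff algorithm, and a bag-of-words cosine similarity replaces the paper's NLP similarity model. All function names (`build_association`, `changed_lines`, `detect`, and so on), the risk weights, and the 0.5 threshold are hypothetical.

```python
from __future__ import annotations

import ast
import difflib
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Entity:
    """Node of a hypothetical code-comment association graph."""
    name: str
    comment: str  # the docstring, used here as the associated comment
    start: int    # first line of the definition (1-based)
    end: int      # last line of the definition (1-based, inclusive)


def build_association(source: str) -> dict[str, Entity]:
    """Stage 1: map each function/class name to its docstring and line span.

    Keyed by bare name for simplicity, so same-named nested definitions overwrite.
    """
    graph = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            graph[node.name] = Entity(node.name, ast.get_docstring(node) or "",
                                      node.lineno, node.end_lineno)
    return graph


def changed_lines(old: str, new: str) -> set[int]:
    """Stage 2: 1-based lines of `new` that difflib reports as replaced or inserted."""
    matcher = difflib.SequenceMatcher(a=old.splitlines(), b=new.splitlines())
    touched = set()
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "insert"):
            touched.update(range(j1 + 1, j2 + 1))
    return touched


def tokens(text: str) -> Counter:
    """Split text and identifiers (camelCase, snake_case) into lowercase words."""
    return Counter(w.lower() for w in re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", text))


def cosine(a: Counter, b: Counter) -> float:
    """Stage 3 stand-in: bag-of-words cosine similarity instead of an NLP model."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def risk_score(similarity: float, change_ratio: float, change_count: int,
               weights: tuple[float, float, float] = (0.5, 0.3, 0.2)) -> float:
    """Stage 4: weighted risk in [0, 1]; the weights are illustrative, not from the paper."""
    w_sim, w_size, w_freq = weights
    frequency = 1 - math.exp(-change_count)  # saturates as a symbol keeps changing
    return w_sim * (1 - similarity) + w_size * min(change_ratio, 1.0) + w_freq * frequency


def detect(old: str, new: str, change_counts: dict[str, int] | None = None,
           threshold: float = 0.5) -> list[tuple[str, float]]:
    """Flag entities whose code changed between versions while their comment did not."""
    change_counts = change_counts or {}  # per-symbol commit counts; default 1 if unknown
    before, after = build_association(old), build_association(new)
    touched = changed_lines(old, new)
    new_lines = new.splitlines()
    flagged = []
    for name, ent in after.items():
        if name not in before or before[name].comment != ent.comment:
            continue  # new entity, or comment already updated with the code
        span = set(range(ent.start, ent.end + 1))
        hits = sorted(span & touched)
        if not hits:
            continue  # code untouched, so the comment is presumed still valid
        modified = "\n".join(new_lines[i - 1] for i in hits)
        sim = cosine(tokens(ent.comment), tokens(modified))
        score = risk_score(sim, len(hits) / len(span), change_counts.get(name, 1))
        if score >= threshold:
            flagged.append((name, round(score, 3)))
    return flagged
```

Calling `detect(old_source, new_source)` on two versions of a file returns `(name, score)` pairs for suspect comments. Similarity is computed against the modified lines only, not the whole body, so an unchanged docstring cannot inflate the score by matching its own text; a real system would replace the token overlap with an embedding model and draw `change_counts` from the commit log.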




License

Copyright (c) 2025 Wei Zhu (Author)

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.